What is PVD-AL?

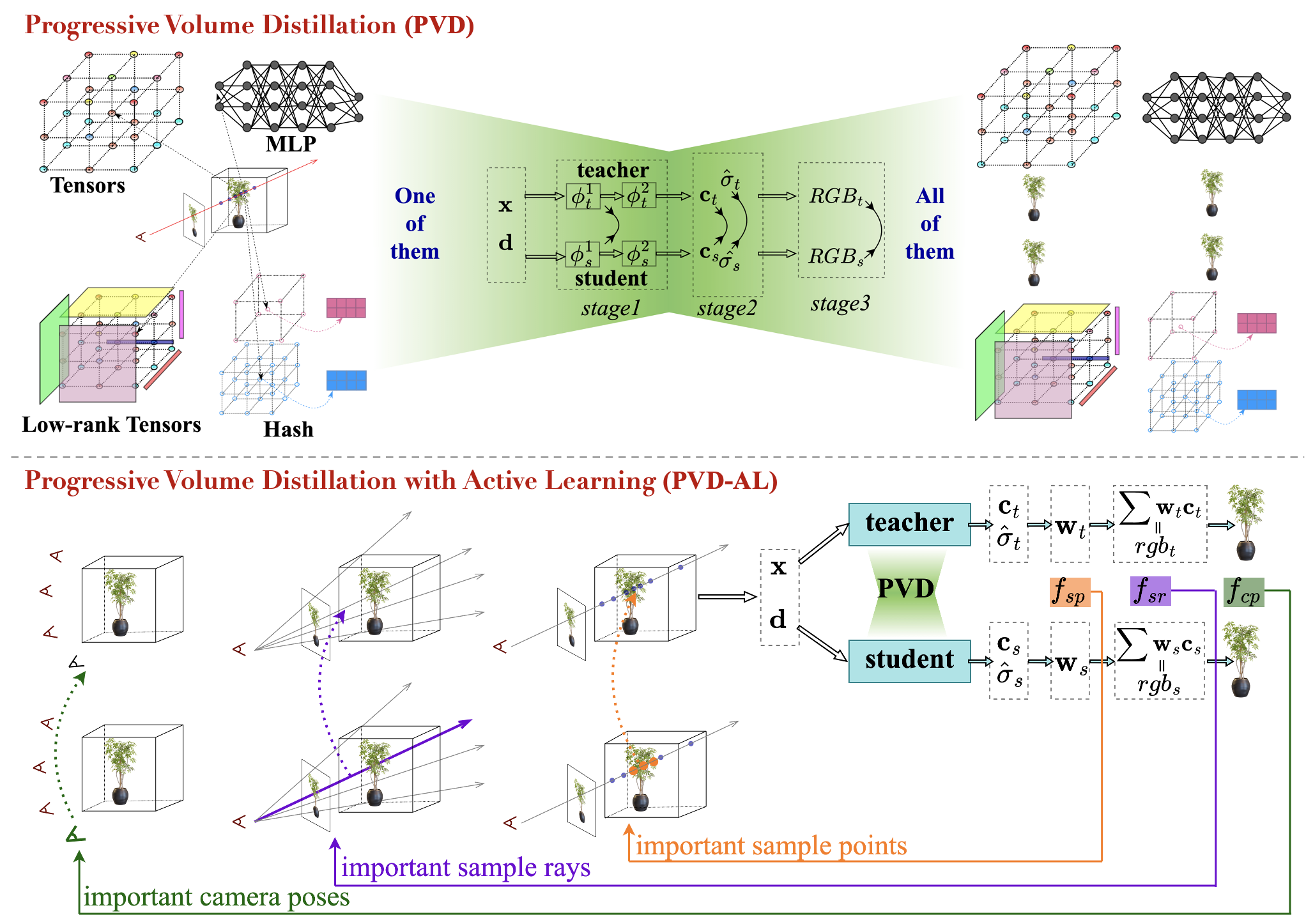

Progressive Volume Distillation with Active Learning (PVD-AL) is a systematic distillation method that enables any-to-any conversion between different NeRF architectures. PVD-AL decomposes each architecture into two parts and progressively distills from shallower to deeper volume representations, leveraging useful information retrieved from the rendering process. In addition, a three-level active learning technique provides continuous feedback during distillation about which camera poses, sampled rays, and sampled points are hard to fit. Guided by this feedback, the student model pays more attention to these difficult cases, which leads to high-quality distillation.
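
As a rough illustration of the shallow-to-deep idea, here is a minimal sketch of a progressive loss schedule. It is not the PVD-AL implementation. It assumes that teacher and student expose three comparable outputs at the same sample points, stored under the hypothetical keys `features` (output of the first part), `density_color` (output of the second part), and `rendered_rgb` (pixels after volume rendering), and that the student's intermediate features have already been mapped to the teacher's shape. The step boundaries are arbitrary placeholders.

```python
from typing import Dict, List, Sequence

# Hypothetical stage names, ordered from shallow to deep. These are
# illustrative assumptions, not identifiers from the PVD-AL code base.
STAGES = ("features", "density_color", "rendered_rgb")


def active_terms(step: int, boundaries: Sequence[int]) -> List[str]:
    """Return the loss terms enabled at `step`.

    `boundaries[i]` is the step at which STAGES[i + 1] is switched on, so the
    shallowest term is always active and deeper terms are added over time
    (earlier terms stay enabled rather than being dropped).
    """
    enabled = 1 + sum(1 for b in boundaries if step >= b)
    return list(STAGES[:enabled])


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    # Mean squared error between two equal-length vectors.
    if len(a) != len(b):
        raise ValueError("length mismatch")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def progressive_distill_loss(
    step: int,
    teacher: Dict[str, List[float]],
    student: Dict[str, List[float]],
    boundaries: Sequence[int] = (1000, 2000),
) -> float:
    # Sum the teacher/student discrepancy over the currently active stages.
    return sum(mse(student[k], teacher[k]) for k in active_terms(step, boundaries))


# Toy outputs standing in for queries of both models at the same points.
teacher = {"features": [1.0, 2.0], "density_color": [0.5, 0.5], "rendered_rgb": [0.2, 0.4]}
student = {"features": [1.0, 1.0], "density_color": [0.0, 0.5], "rendered_rgb": [0.2, 0.0]}
for step in (0, 1500, 2500):
    print(step, active_terms(step, (1000, 2000)), round(progressive_distill_loss(step, teacher, student), 4))
```

Keeping the shallow terms active after the deeper ones are switched on is one design choice; a schedule that replaces earlier terms instead would be an equally valid variant of the same idea.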

What can PVD-AL do?

1. mutual conversion between different NeRF architectures

PVD-AL allows conversions between different NeRF architectures, including MLPs, sparse tensors, low-rank tensors, and hash tables. This breaks down the barriers between these otherwise independent lines of research and helps each architecture reach its full potential.

2. reduce training time and compress model parameters

With PVD-AL, an MLP-based NeRF model can be distilled from a hashtable-based Instant-NGP model 10x to 20x faster than training the original NeRF from scratch, and the resulting model has fewer parameters than the hashtable-based teacher.

3. improve model performance

With PVD-AL, distilling from a high-performance teacher, such as a hashtable or VM-decomposition model, frequently yields better student synthesis quality than training the student from scratch.

4. characteristic migration

PVD-AL allows properties of different architectures to be fused. Applying PVD-AL multiple times yields models that combine several editing properties. It is also possible to convert a scene represented by one model into another model that runs more efficiently, meeting the real-time requirements of downstream tasks.

5. active learning as a plug-in

The three levels of active learning in PVD-AL are decoupled, flexible, and highly versatile, so they can also be applied as a plug-in to other distillation tasks that use a NeRF-based model as the teacher or the student.
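
Because an active learning component only needs per-item errors, it can stay independent of the teacher and student architectures. The sketch below illustrates this with a generic error-proportional sampler, a stand-in technique and not a reproduction of PVD-AL's actual strategy. The class `HardnessSampler` and its `floor` and `init_error` parameters are hypothetical; the assumption is that one instance is kept per level (camera poses, rays, or points along a ray) and fed the latest teacher/student error for each item.

```python
import random
from typing import List, Optional, Sequence


class HardnessSampler:
    """Hypothetical sampler: pick indices with probability proportional to error.

    It only sees integer indices and scalar errors, so it does not depend on
    whether the teacher or student is an MLP, a tensor decomposition, or a
    hash table.
    """

    def __init__(self, n: int, floor: float = 1e-3, init_error: float = 1.0):
        if n <= 0:
            raise ValueError("n must be positive")
        self.floor = floor  # keeps easy items from being starved entirely
        self.errors: List[float] = [init_error] * n  # unseen items start "hard"

    def update(self, indices: Sequence[int], errors: Sequence[float]) -> None:
        # Overwrite the stored error for each item that was just evaluated.
        for i, e in zip(indices, errors):
            self.errors[i] = float(e)

    def sample(self, k: int, rng: Optional[random.Random] = None) -> List[int]:
        # Draw k indices with replacement, weighted by (error + floor).
        if rng is None:
            rng = random.Random()
        weights = [max(e, 0.0) + self.floor for e in self.errors]
        return rng.choices(range(len(self.errors)), weights=weights, k=k)


# Example at the camera-pose level: pose 0 was just fitted poorly.
pose_sampler = HardnessSampler(n=4)
pose_sampler.update([0, 1, 2, 3], [0.9, 0.01, 0.01, 0.01])
picks = pose_sampler.sample(1000, random.Random(0))
print(picks.count(0) / len(picks))  # most draws go to the hard pose
```

Sampling with replacement plus a small floor is only one option; selecting the top-k hardest items each iteration, or reweighting the loss by error instead of resampling, would be plausible alternatives under the same plug-in interface.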